NASA / JPL-Caltech

The Mars Robot Making Decisions on Its Own

Thanks to artificial-intelligence software, the Curiosity rover can target rocks without human input.

In 2012, the Curiosity rover began its slow trek across the surface of Mars, listening for commands from Earth about where to go, what to photograph, which rocks to inspect. Then last year, something interesting happened: Curiosity started making decisions on its own.

In May last year, engineers back at NASA installed artificial-intelligence software on the rover’s main flight computer that allowed it to recognize inspection-worthy features on the Martian surface and correct the aim of its rock-zapping lasers. The humans behind the Curiosity mission are still calling the shots in most of the rover’s activities. But the software allows the rover to actively contribute to scientific observations without much human input, making the leap from automation to autonomy.

In other words, the software—just about 20,000 lines of code out of the 3.8 million that make Curiosity tick—has turned a car-sized, six-wheeled, nuclear-powered robot into a field scientist.

And it’s good, too. The software, known as Autonomous Exploration for Gathering Increased Science, or AEGIS, selected inspection-worthy rocks and soil targets with 93 percent accuracy between last May and this April, according to a study from its developers published this week in the journal Science Robotics.

AEGIS works with an instrument on Curiosity called the ChemCam, short for chemistry and camera. The ChemCam, a one-eyed, brick-shaped device that sits atop the rover’s spindly robotic neck, emits laser beams at rocks and soil as far as 23 feet away. It then uses the light coming from the impacts to analyze and detect the geochemical composition of the vaporized material. Before AEGIS, when Curiosity arrived at a new spot, ready to explore, it fired the laser at whatever rock or soil fell into the field of view of its navigation cameras. This method certainly collected new data, but it wasn’t the most discerning way of doing it.

With AEGIS, Curiosity can search and pick targets in a much more sophisticated fashion. AEGIS is guided by a computer program that developers, using images of the Martian surface, taught to recognize the kind of rock and soil features that mission scientists want to study. AEGIS examines the images and finds targets that resemble set parameters, ranking them by how closely they match what the scientists asked for. (It’s not perfect; AEGIS can sometimes include a rock’s shadow as part of the object.)

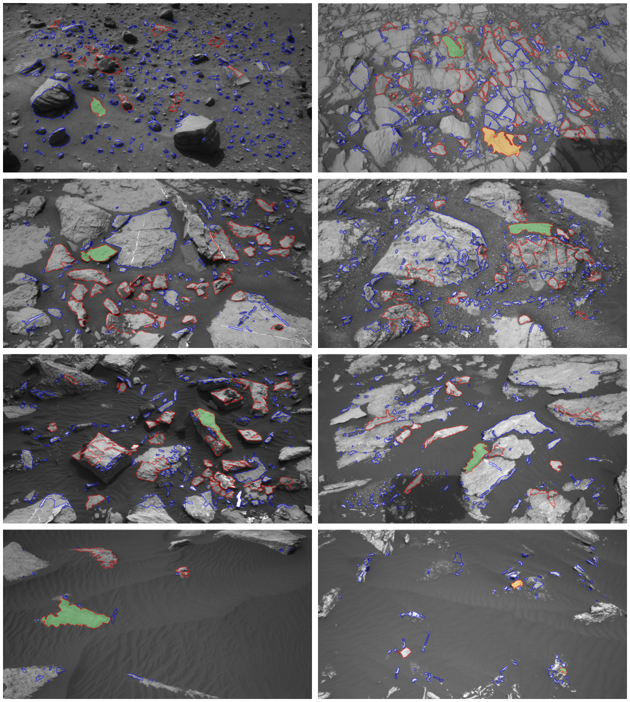

Here’s how Curiosity’s cameras see the Martian landscape with AEGIS. Targets outlined in blue were rejected; those outlined in red are potential candidates. The best targets are filled in with green, and the second-best with orange.

When AEGIS settles on a preferred target, ChemCam zaps it.
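The filter-then-rank step described above can be sketched in a few lines of Python. This is only an illustration, not AEGIS's actual code: the `Candidate` fields, the `score` formula, and the `min_size_px` cutoff are all assumptions invented for the example. Candidates that fail a basic check are rejected, and the rest are sorted by how closely they match the profile the scientists asked for.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    size_px: float      # hypothetical feature: apparent size in pixels
    brightness: float   # hypothetical feature: mean brightness, 0..1


def score(candidate, wanted):
    """Return a match score in (0, 1]; 1.0 means an exact match to `wanted`."""
    # Relative size difference plus absolute brightness difference (assumed metric).
    distance = (
        abs(candidate.size_px - wanted["size_px"]) / wanted["size_px"]
        + abs(candidate.brightness - wanted["brightness"])
    )
    return 1.0 / (1.0 + distance)


def rank_targets(candidates, wanted, min_size_px):
    """Reject candidates that are too small, then sort the rest best-first."""
    kept = [c for c in candidates if c.size_px >= min_size_px]
    return sorted(kept, key=lambda c: score(c, wanted), reverse=True)


# Usage: three made-up detections and a made-up request from the science team.
detections = [
    Candidate("A", size_px=40, brightness=0.5),
    Candidate("B", size_px=10, brightness=0.5),   # too small, will be rejected
    Candidate("C", size_px=80, brightness=0.9),
]
wanted_profile = {"size_px": 40, "brightness": 0.5}
ranked = rank_targets(detections, wanted_profile, min_size_px=20)
best = ranked[0]
```

In this toy version, the first item in `ranked` plays the role of the green target in the image, and the second plays the role of the orange one.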

AEGIS also helps ChemCam with its aim. Let’s say operators back on Earth want the instrument to target a specific geological feature they saw in a particular image. And let’s say that feature is a narrow mineral vein carved into bedrock. If the operators’ commands are off by a pixel or two, ChemCam could miss it. They may not get a second chance to try if Curiosity’s schedule calls for it to start driving again. AEGIS corrects ChemCam’s aim both in human-requested observations and in its own searches.

These autonomous activities have allowed Curiosity to do science when Earth isn’t in the loop, says Raymond Francis, the lead system engineer for AEGIS at NASA’s Jet Propulsion Laboratory in California. Before AEGIS, scientists and engineers would examine images from Curiosity, determine further observations, and then send instructions back to Mars. But while Curiosity is capable of transmitting large amounts of data back to Earth, it can only do so under certain conditions. The rover can only directly transmit data to Earth for a few hours a day because doing so saps power. It can also transmit data to orbiters circling Mars, which then relay it to Earth, but the spacecraft only have eyes on the rover for about two-thirds of the Martian day.

“If you drive the rover into a new place, often that happens in the middle of the day, and then you’ve got several hours of daylight after that when you could make scientific measurements. But no one on Earth has seen the images, no one on Earth knows where the rover is yet,” Francis says. “We can make measurements right after the drives and send them to Earth, so when the team comes in the next day, sometimes they already have geochemical measurements of the place the rover’s in.”

Francis said there was at first some hesitation on the science side of the mission when AEGIS was installed. “I think there’s some people who imagine that the reason we’re doing this is so that we can give scientists a view of Mars, and so we shouldn’t be letting computers make these decisions, that the wisdom of the human being is what matters here,” he said. But “AEGIS is running during periods when humans can’t do this job at all.”

AEGIS is like cruise control for rovers, Francis said. “Micromanaging the speed of a car to the closest kilometer an hour is something that a computer does really well, but choosing where to drive, that’s something you leave to the human,” he said.

There were some safety concerns in designing AEGIS. Each pulse from ChemCam’s laser delivers more than 1 million watts of power. What if the software somehow directed ChemCam to zap the rover itself? To protect against that disastrous scenario, AEGIS engineers made sure the software was capable of recognizing the exact position of the rover during its observations. “When I give talks about this, I say we have a rule that says, don’t shoot the rover,” Francis says. AEGIS is also programmed to keep ChemCam’s eye from pointing at the sun, which could damage the instrument.
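A "don't shoot the rover, don't look at the sun" rule like the one Francis describes could be expressed as a simple angular keep-out check. The sketch below is a guess at the general idea, not the flight software: the function names, the direction vectors, and both keep-out angles are hypothetical values chosen for illustration.

```python
import math


def angle_between_deg(a, b):
    """Angle in degrees between two 3-D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos_angle = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.degrees(math.acos(cos_angle))


def is_safe_pointing(pointing, sun_dir, rover_dirs,
                     sun_keepout_deg=20.0, rover_keepout_deg=5.0):
    """Return True only if `pointing` stays clear of the sun and the rover.

    `rover_dirs` is a list of directions toward parts of the rover's own
    body; the keep-out angles are assumed values, not mission parameters.
    """
    if angle_between_deg(pointing, sun_dir) < sun_keepout_deg:
        return False
    return all(
        angle_between_deg(pointing, part) >= rover_keepout_deg
        for part in rover_dirs
    )


# Usage: sun straight overhead, one rover part off to the side.
sun = (0.0, 0.0, 1.0)
rover_parts = [(0.0, -1.0, 0.0)]
ok_to_fire = is_safe_pointing((1.0, 0.0, 0.0), sun, rover_parts)
```

Any target the ranking step proposes would have to pass a check of this kind before the laser fires.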

In many ways, it’s not surprising that humanity has a fairly autonomous robot roaming another planet, zapping away at rocks like a nerdy Wall-E. Robots complete far more impressive tasks on Earth. But Curiosity is operating in an environment no human can control. “In a factory, you can program a robot to move in a very exact way over to a place where it picks up a part and then moves again in a very exact way and places it onto a new car that’s being built,” Francis says. “You can be assured that it will work every time. But when you’re in a space exploration context, literally every time AEGIS runs it’s in a place no one has ever seen before. You don’t always know what you’re going to find.”

Francis says NASA’s next Mars rover, scheduled to launch in 2020, will leave Earth with AEGIS already installed. Future robotic missions to the surfaces or even oceans of other worlds will need it, too. The farther humans send spacecraft, the longer it will take to communicate with them. The rovers and submarines of the future will spend hours out of Earth’s reach, waiting for instructions.

Why not give them something to do?
