Study raises questions about military’s brain injury assessment tool

At issue is determining which instrument works best for evaluating mild injuries that can lead to serious impairment down the road.

Joe Radle/Getty Images

Senior Defense Department officials have stressed repeatedly in public they are doing everything they can to provide the best care possible to U.S. troops injured in Iraq and Afghanistan. But that might not be the case for the tens of thousands of troops who have experienced some form of brain injury.

Four years ago, a group of Air Force doctors treating wounded soldiers at field hospitals in Iraq sought a better way to evaluate the impact of blast injuries on soldiers' brains when there were no visible head wounds -- a condition known as mild traumatic brain injury. Mild TBI can be deceptive, because it often occurs without any outward signs of trauma. A soldier can recover completely from mild TBI, but left undiagnosed and untreated, it can lead to serious impairment over time, especially if the individual is exposed to additional blasts later on.

Lacking an adequate tool to help determine when it was safe to send soldiers back to combat, Air Force doctors in 2006 began using an off-the-shelf, Web-enabled assessment tool called the Cognitive Stability Index, developed by a small, New York-based company called Headminder. At least one of the Air Force doctors had used it before, liked it, and believed it could work well in the field.

The CSI was developed in 1999 by David Erlanger, a neuropsychologist, to measure deterioration, improvement or stability in people whose brain function has been compromised through illness, disease or injury. It has been used in a number of drug research studies and clinical settings and has a good track record, doctors unaffiliated with Headminder told Government Executive.

The Air Force doctors in Iraq were impressed enough with the CSI's clinical performance in the field that they sought and received approval from a military institutional review board to conduct a scientific study comparing the CSI to two other tools the military uses: a computer-based tool the Army developed in 1984, called the Automated Neuropsychological Assessment Metrics; and a basic screening tool developed by military medical personnel in 2006 called the Military Acute Concussion Evaluation. The study compared all three instruments to a paper-and-pencil tool called the Repeatable Battery for Assessment of Neuropsychological Status, and was conducted from late 2006 through August 2007 on troops who had lost consciousness at least briefly, because that was the only way in battlefield conditions to know if a soldier had in fact sustained a concussion. RBANS served as the standard against which the others were measured, because it is used widely in the Veterans Affairs Department and private institutions and is considered a reliable measure of cognitive ability.

This was the first combat study of its kind approved by an institutional review board, a panel of experts charged with ensuring that biomedical research involving human subjects meets federal regulations. It required a deep commitment from the military medical personnel who conducted it under difficult circumstances on the battlefield. According to three of the doctors directly involved in the study and an outside adviser, the data they collected showed that CSI correlated closely with RBANS, the standard, and worked notably better than the Army's ANAM in important respects, particularly in measuring blast effects on memory and attention. The data showed that the Military Acute Concussion Evaluation had limited utility.

What happened next with the study is the subject of some disagreement among those with knowledge of it. Doctors involved told Government Executive the study was "put on hold" by senior Air Force officials, although none of the physicians or psychologists had direct knowledge of the reason. Two military officials familiar with the study said it essentially was dropped after the chief executive of PanMedix, Headminder's partner and the company that developed the electronic backbone of CSI, alienated key Air Force officials by aggressively promoting the initial findings. Another Air Force official told Government Executive the study was "blocked" by senior Air Force and Army officials who favored using ANAM, which didn't perform well in the study but essentially was free because the Army developed it.

Officials at the Defense Centers of Excellence for Psychological Health and Traumatic Brain Injury, or DCoE, declined repeated requests for interviews from Government Executive. But they did issue a written statement saying the study was not dropped, but instead was "completed after enrolling the number of patients approved by the institutional review board."

What is undisputed is that the data from the 2006-2007 combat study has never been published in a peer-reviewed journal, an essential step in validating the team's findings. Without such review by disinterested peer scientists, the doctors' findings and conclusions are in limbo.

Jeffrey Barth, chief of medical psychology at the University of Virginia School of Medicine and medical director of the school's Neuropsychology Assessment Laboratories, is an expert in mild traumatic brain injury and served as an outside adviser on the study. He said he doesn't know why the study was never published, or if it was even completed. "We certainly had sufficient data to put together [a manuscript for] publication," he said. "All I know is we were writing an article and the last I heard about it [was] in the middle of 2008."

In a May 28, 2008, memo, then-assistant secretary of Defense for health affairs Dr. S. Ward Casscells directed all three service chiefs to immediately begin using ANAM as the assessment tool of choice "until ongoing studies to obtain evidence-based outcomes of various neurocognitive assessment tools are completed."

"The issue here would be, did the DoD know there was a superior product available?" Barth said. He doesn't know the answer to that. "This particular paper these folks were writing, which I was reviewing and being a consultant on, did show in a sort of head-to-head evaluation that CSI from Headminder did a better job in correlation with the RBANS than the ANAM did," he said. If the study had been sent out for review, "and if those peers … thought that the findings were correct … that would at least be one piece of information the Department of Defense should want to utilize in determining what they're going to use in theater."

In a May 24 interview with Government Executive, Casscells said he was not aware of the study that showed CSI to outperform ANAM and MACE. In issuing the directive to the services mandating the use of ANAM, Casscells said he relied on the advice of then-Lt. Col. Michael Jaffee, an Air Force neurologist who now is the national director of the Defense and Veterans Brain Injury Center, and other experts from the Defense Health Board. Jaffee declined through a spokeswoman to be interviewed for this article.

"I do have confidence that all this is done in open committees without any bias," Casscells said. "Having said that, doctors have all kinds of things that they favor. They have their pet theories and pet devices they develop, et cetera. That's why it's important to have fairly large groups looking at this and I think we had a large group looking at that [ANAM decision]." Casscells is now the John E. Tyson Distinguished Professor of Medicine and Public Health and Vice President for External Affairs and Public Policy at the University of Texas Health Science Center in Houston.

According to the DCoE statement, "The DoD and [Defense and Veterans Brain Injury Center] have encouraged an open academic review and publication of this data." Headminder's Erlanger, who was a co-investigator in the study, disputes that, saying, "In my opinion, the DoD and [Defense and Veterans Brain Injury Center] actively discouraged completion and publication of this study." In addition, some Air Force doctors involved told Government Executive they were prohibited from continuing to work with Headminder, although none knew why.

While the Defense and Veterans Brain Injury Center, which is part of DCoE, has convened a number of panels in recent years to consider blast-concussion injury, Erlanger said, "They always have invited ANAM [representatives] to present to these consortium panels and have never invited me or asked any of my co-authors for presentation and discussion of any of the data we collected in Iraq. They have simply tried to pretend that the research never happened. This is hardly encouraging authors to present."

U.S. Navy

Dr. S. Ward Casscells, former assistant secretary of Defense for health affairs, says he was unaware of the study that showed CSI to outperform two other brain injury screening tools.

A New Study

According to the statement from Defense, "The DoD is committed to selecting the best available [neurocognitive assessment tool] for use by the DoD population and has invited all companies to participate in an objective head-to-head evaluation."

Defense now expects to launch this new study in August and complete it by November 2013. The DCoE statement said the department is working with the National Academy of Neuropsychology "to have an independent study advisory committee of acknowledged [neurocognitive assessment tool] experts who have no incentive or affiliation with any of the commercial NCAT companies." An independent company will analyze the data and "study results will be published in peer-reviewed journals and presented to policymakers in order to inform future decision-makers."

The CSI will not be included in the evaluation, however. According to PanMedix CEO Don Comrie, the company has no confidence that DoD will conduct a fair or appropriate head-to-head study, in part because all the instruments initially selected for head-to-head evaluation, including one developed by Headminder called the Concussion Resolution Index, were designed to assess sports-related injury. Defense officials did not select CSI for the study, Comrie said, and his company will not participate using its sports-injury product, which it does not believe adequately measures more devastating combat injuries.

Although blast injuries share some characteristics with sports injuries, they differ in significant ways, UVA's Barth said. Barth pioneered sports injury research in a groundbreaking study in the 1980s that assessed the impact of concussion on more than 2,300 football players over time. Contact sports typically lead to two types of brain injury: blunt injury, such as when a helmet smashes into a head; and rotational injury where the brain turns inside the skull, such as when a player gets hit from the back and the front at the same time, or hit from the side, creating a whiplash effect, he said.

Blast injuries typically include four elements: The primary characteristic is the atmospheric pressure created by the blast wave, followed by a vacuum. The wave pushes, then the vacuum pulls. The effect on the brain is not entirely clear, but it can wreak havoc with brain and spinal fluid, creating tiny bubbles that affect the vascular system, Barth said.

A secondary effect is created by rocks and other items that can be blown into a person standing near the blast -- similar to the blunt trauma in sports injury. A third effect is that the force of the blast can pick up a person and throw him or her around -- also similar to the rotational brain injuries found in sports.

"Then there's a [fourth] level of injury, which is the toxic fumes and so on that may occur in the blast," Barth said. The toxins can affect brain tissue, increase a soldier's blood pressure and leave burns.

While the military can learn a lot from the treatment of concussive injury in sports, the differences are important, Barth said. He said he didn't know why Defense would exclude CSI from the new head-to-head study of cognitive assessment tools. "To me it makes more sense to use the CSI," because it can pick up a broader range of cognitive impairment than the company's sports concussion tool, which he also thinks highly of and uses to assess concussion injuries with the university's football team.

One of the things that makes Headminder's tools particularly valuable is they are Web-enabled, meaning medical professionals can access historical data anywhere they can get an Internet connection, allowing them to instantly compare post-injury brain functioning with any data collected earlier, Barth said.

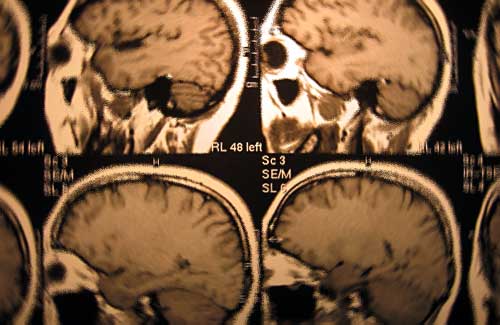

stock.xchng user kilokilo

Brain injury can be present even when CAT scans appear normal, military doctors say.

Creating a Baseline

In the 2008 National Defense Authorization Act, Congress required the Pentagon to develop and deploy "a system of pre-deployment and post-deployment screenings of cognitive ability in members for the detection of cognitive impairment." The law also required Defense "to assess for and document traumatic brain injury by establishing a protocol for a computer-based, pre-deployment neurocognitive assessment (to include documentation of memory), to create a baseline for members of the armed forces for future treatment."

The May 2008 memo from Casscells required the services to use the Army's Automated Neuropsychological Assessment Metrics to create such a baseline. But there are a number of problems with the way that decision has been implemented, several experts said. To date, the department has conducted more than 500,000 assessments with ANAM. But according to the medical literature and a contractor involved in administering ANAM, each soldier would need five separate assessments to create a stable baseline for that individual. In most cases, soldiers are receiving a single pre-deployment assessment, raising doubts about the reliability of the data being collected.

In addition, many of the assessments are being conducted just weeks or days before a soldier deploys, when he or she has many other priorities and might be too distracted to perform "normally" as would be necessary in a baseline test.

Finally, ANAM is not Web-enabled, rendering the baseline data useless to medical personnel in Iraq and Afghanistan, several medical professionals told Government Executive. In a statement responding to that criticism, Defense said, "Data is available electronically in theater to assist in [return-to-duty evaluation decisions]. Currently, this is being done through an electronic and telephone help desk systems [sic]."

Casscells said Defense set up both internal and external panels of experts to review the experience with the ANAM, the idea being it would evolve as needed over time. "Almost as soon as we launched it there were people saying it's not perfect, so my assumption is there are going to be proposals to improve upon it," he said.

A Way Forward

Dr. Gerald Grant, the Air Force neurosurgeon who led the combat study in 2006, said he hasn't given up on being able to publish the data he and his colleagues collected under extremely difficult circumstances. Besides publishing the earlier findings in a peer-reviewed journal, he would like to follow up with the nearly 100 soldiers assessed in the original combat study and measure their progress since then. Last summer he applied for approval to follow up from an institutional review board, but he hasn't received permission yet.

Grant retired from the Air Force after he returned from Iraq in 2006. Now an associate professor of pediatric neurosurgery at Duke University Medical Center in Durham, N.C., he told Government Executive, "I still believe in the data." He said he has begun working with some of the other doctors involved in the study and expects to finalize a manuscript within the next few weeks. "It has to be approved by the Air Force and the Army [prior to publishing in a peer-reviewed journal], and I don't know if they'll do that," he said.

"I'm committed to get this out. We owe it to the soldiers," he said.

Response to this article from Michael Lutz, Chairman and COO, Vista Partners, Inc./Vista LifeSciences